My first impressions of Qlik Answers: Is it worth it?

- Igor Alcantara

- Aug 10, 2024

Good day, data voyagers! This is Igor Alcantara, back from vacation with one more article!

When Qlik announced the acquisition of the LLM/GenAI company Kyndi a few months ago, I was eager to see how they would integrate it into the Qlik platform. The 3rd most enjoyable part of my job as a data scientist is staying up to date with what’s available on the market and with new trends and innovations. So I was already somewhat familiar with Kyndi, just as I was with BigSquid before it became Qlik AutoML.

Initially, I thought Qlik would use Kyndi to replace Insight Advisor. However, when I attended Qlik Connect in Orlando this past June, I realized how wrong I was. We learned about Qlik Answers, a new Knowledge Assistant that adds GPT-like functionality to Qlik Cloud.

If you haven’t played around with or watched a demo of Qlik Answers, it’s essential to understand what it is and what it isn’t. Qlik Answers isn’t a chatbot that answers questions about your data model. Instead, it’s a chatbot-style assistant that interacts with end users based on knowledge acquired from unstructured data. For those unfamiliar with the term, unstructured data includes any data that doesn’t come from tabular or hierarchical sources. Qlik Answers can use sources like PDFs, HTML, text files, and more as sources of knowledge.

This article isn’t a Qlik Answers how-to. If you want a detailed, step-by-step tutorial, I recommend the excellent videos by my dear friend Dalton Ruer, the famous Qlik Dork. My goal here is to share the use case I chose for my first demo and my thoughts on this highly anticipated addition to the already impressive Qlik Cloud.

Getting Started

Now, let's dive into the 2nd most enjoyable part of my job: exploring new functionalities and testing the boundaries of what's possible. The first challenge was finding a good use case and the necessary documents to build a knowledge base. My initial idea wasn’t feasible because it required more than twice the capacity I had available. The license we were given allows us to index 5,000 pages per month, but I needed more than 11,000 pages to build my "dream demo." While waiting for additional capacity, I opted for my second-best idea and downloaded a few medical papers (thanks to PubMed) focused on cardiology and generational studies. I already had a glossary, AutoML models, and a full Qlik app in that area with features like ChatGPT and Bedrock integration, automation, tabular reporting, clustering, predictive, and prescriptive analysis. It all made sense.

The first task was to build a Knowledge Base. I tried two approaches, both of which worked well: adding the PDF files manually and creating a connection to retrieve them from an AWS S3 bucket. It doesn’t matter whether you save your files in the root of the bucket or in a subfolder; both scenarios work fine. The only thing to watch out for is file size: currently, the limit is 50MB per file, which is usually more than enough. If you have larger files, you’ll need to split them, but that’s not a big deal. What ultimately matters for your license is the number of pages you index.
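If you have a large folder of documents, a quick pre-check before uploading can flag the files that exceed the size limit. This is a minimal Python sketch, assuming the files sit in a local folder (subfolders included, mirroring the root-or-subfolder S3 layouts mentioned above). The function name and the conversion of 50MB to 50 × 1024 × 1024 bytes are my assumptions, not something defined by Qlik Answers.

```python
from pathlib import Path

# Assumption: the 50MB per-file limit is interpreted as 50 * 1024 * 1024 bytes.
MAX_FILE_BYTES = 50 * 1024 * 1024


def files_over_limit(folder, limit_bytes=MAX_FILE_BYTES, pattern="*.pdf"):
    """Return (file_name, size_in_bytes) for every matching file above the limit.

    Searches the folder recursively, so files in subfolders are checked too.
    """
    too_big = []
    for path in sorted(Path(folder).rglob(pattern)):
        if path.is_file():
            size = path.stat().st_size
            if size > limit_bytes:
                too_big.append((path.name, size))
    return too_big
```

Calling `files_over_limit("papers/")` on a hypothetical local `papers/` folder returns the PDFs you would need to split before uploading; an empty list means everything fits.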

Uploading the files to the Knowledge Base is just the first step. Transforming that list of files into actual knowledge requires starting an indexing task. It’s simple—just a couple of mouse clicks, and it’s done. The indexing is fast, and if you have changes in the files, you can always reindex manually or set up a daily task (currently, daily is the only option).

Creating the Assistant

Once the Knowledge Base is indexed, you're ready to create the Assistant. Like the Knowledge Base, this process is straightforward. You can do it directly from the Knowledge Base or via the Qlik Cloud waffle menu.

Once the Assistant is created and the Knowledge Base is linked, you’re ready to use it. I started with a few simple questions, and the initial results were very promising. For instance, when I asked how the risk of coronary heart disease differs between smokers and non-smokers, the Assistant not only provided the correct answer but also cited its references. I checked them, and they were accurate. Looking for explainability? You’ve got it!

Next, I asked something more complex: "What should I recommend to patients with a high risk of cardiovascular disease?" The Assistant provided a good, accurate answer. Then, in the same dialogue, I tested its ability to refer back to previous content by asking, "Can you elaborate on item #3?", which was about medications for controlling cardiovascular disease. To my surprise, it succeeded again and provided references, which I verified were correct.

Testing the Limits

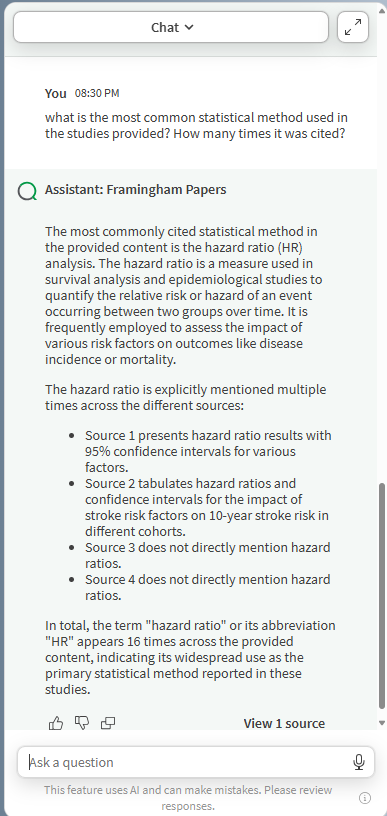

All good so far, but I remained skeptical. I decided to raise the stakes with a complex question that was both quantitative and qualitative: "What is the most common statistical method used in the studies provided? How many times was it cited?" Aha! I thought I had caught it! The Assistant told me it was "Hazard Ratio (HR) Analysis." While that methodology exists and the Assistant correctly explained what it is, it didn’t give me the answer I wanted, which required checking each paper for the statistical method used, counting them, and answering with something more precise, like Chi-square, ANOVA, or t-test. Maybe it confused Heart Rate with Hazard Ratio, since both are abbreviated HR, but either way the answer was wrong.
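For contrast, this is what the deterministic version of that task looks like: scan each paper for mentions of a test and count how many papers mention it. It is a minimal Python sketch, assuming the PDFs have already been converted to plain text. The `TEST_PATTERNS` vocabulary and the function name are hypothetical, and a mention is only a rough proxy for a test actually being used in a study's analysis.

```python
import re
from collections import Counter

# Hypothetical keyword patterns (matched against lowercased text);
# a real study would need a curated vocabulary.
TEST_PATTERNS = {
    "Chi-square": r"chi[- ]?squared?",
    "ANOVA": r"\banova\b|analysis of variance",
    "t-test": r"\bt[- ]test\b",
    "Hazard ratio (Cox)": r"hazard ratio|\bcox\b",
}


def count_tests_per_paper(papers):
    """Count how many papers mention each test at least once.

    papers: dict mapping a paper title to its full plain text.
    """
    counts = Counter()
    for text in papers.values():
        lowered = text.lower()
        for test, pattern in TEST_PATTERNS.items():
            if re.search(pattern, lowered):
                counts[test] += 1
    return counts
```

`count_tests_per_paper(papers).most_common(1)` then gives the most frequently mentioned test and its count, which is exactly the number the Assistant could not produce.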

Where some people see a problem, I see an opportunity. I used this mistake to review the answer I was given, providing a "thumbs down" and explaining why the answer was unsatisfactory.

Did I say it gave me one good opportunity? Actually, it gave me two! Upon reflection, I realized I could help my Assistant by teaching it some statistics. That’s when I created a second Knowledge Base, "Statistical Theory KB," stocked with a few statistics and data science books. After indexing and adding it to my Assistant, I tried again.

On the second attempt, it gave me a similar answer, but now that it had a better understanding of statistics, it provided a more comprehensive explanation of the concept. To be honest, the answer was still wrong, though not entirely. The most commonly used statistical method in the papers I provided is Chi-square. However, if you want to calculate the risk of a patient developing cardiovascular disease, then hazard ratio analysis is a good bet, and it was cited in a couple of papers.

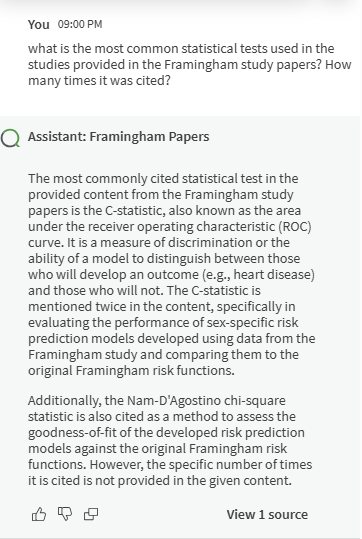

I then made a small change to my prompt, simply replacing the word "method" with "test," since I realized that "method" was too generic. To my surprise, the answer was still not perfect: it mentioned AUC (Area Under the Curve), which is not a statistical test, but it also acknowledged the answer I was expecting: Chi-square. However, as I had expected, it still didn’t provide the count I wanted. ChatGPT, Gemini, Llama, and others share the same limitation. Remember, the second "L" in LLM stands for "Language," and there’s no "N" or "M" for Numbers or Math. Keep that in mind.

Nevertheless, the fact that it found Chi-square was good news. However, as a scientist, you have to remember that correlation does not imply causation. Did it find it because it was the most common test used? Of course not: it can read and write, but not count. Still, it was a better answer. Was the semi-correct answer due to my improved prompt or to the addition of the Statistical Theory Knowledge Base? I tested that by removing the Statistical Theory Knowledge Base and trying again in a new chat. This time, it only gave me the AUC answer, the incorrect one, which is not even a statistical test but a measure of model "accuracy." I used the review function to report this inaccuracy and moved on.

The Final Test

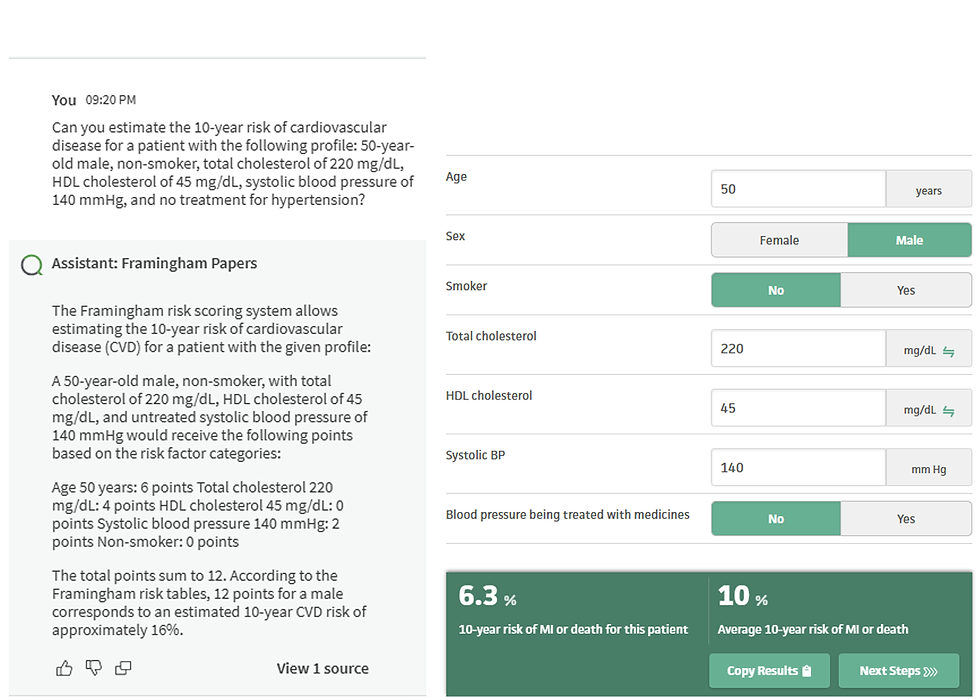

For my final test in this article (I continued testing afterward, but I won’t cover that here, as this article is already quite long), I asked about the risk of cardiovascular disease for a specific patient profile. I expected it to use the Framingham Risk Score formula, which would have resulted in an answer of around 6% (you can check this with a Framingham risk calculator). However, it gave me 16%. Why? It could be due to its inability to calculate accurately, or perhaps it calculated the risk for a smoker rather than a non-smoker (which, in that case, would be 15.9%). Following Occam’s razor, the simplest explanation is that, just like any other LLM, it simply can’t calculate.
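The Framingham score is plain arithmetic on a Cox proportional hazards model, which is why a calculator gets it right every time. Here is a minimal Python sketch of one published variant, the 2008 general cardiovascular disease model by D'Agostino et al. The coefficients are transcribed as an assumption and should be verified against the original publication before any real use. Online calculators may implement a different Framingham version, so this sketch is not meant to reproduce the 6% figure above.

```python
import math

# Assumed coefficients of the 2008 Framingham general CVD model (verify before use).
COEFFS = {
    "male": {
        "ln_age": 3.06117, "ln_total_chol": 1.12370, "ln_hdl": -0.93263,
        "ln_sbp_untreated": 1.93303, "ln_sbp_treated": 1.99881,
        "smoker": 0.65451, "diabetes": 0.57367,
        "baseline_survival": 0.88936, "mean_sum": 23.9802,
    },
    "female": {
        "ln_age": 2.32888, "ln_total_chol": 1.20904, "ln_hdl": -0.70833,
        "ln_sbp_untreated": 2.76157, "ln_sbp_treated": 2.82263,
        "smoker": 0.52873, "diabetes": 0.69154,
        "baseline_survival": 0.95012, "mean_sum": 26.1931,
    },
}


def framingham_10y_cvd_risk(sex, age, total_chol, hdl, sbp,
                            bp_treated=False, smoker=False, diabetes=False):
    """Return 10-year CVD risk as a fraction in (0, 1).

    Cholesterol values in mg/dL, systolic blood pressure (sbp) in mmHg.
    """
    c = COEFFS[sex]
    sbp_coef = c["ln_sbp_treated"] if bp_treated else c["ln_sbp_untreated"]
    score = (c["ln_age"] * math.log(age)
             + c["ln_total_chol"] * math.log(total_chol)
             + c["ln_hdl"] * math.log(hdl)
             + sbp_coef * math.log(sbp)
             + c["smoker"] * float(smoker)
             + c["diabetes"] * float(diabetes))
    # Cox model: risk = 1 - S0 ** exp(individual score - mean score)
    return 1 - c["baseline_survival"] ** math.exp(score - c["mean_sum"])
```

Changing only `smoker=False` to `smoker=True` for the same profile raises the result, which is the kind of slip I suspected in the Assistant's 16% answer; a tool like this computes it exactly instead of guessing.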

Conclusion

Before I wrap up, I’d like to highlight the greatest feature of Qlik Answers that I tested, which I’ll only detail in a future article: security! Qlik Answers comes with a wealth of general built-in knowledge (like statistical theory), but you need to provide the domain-specific knowledge. So how do you ensure that only certain people can access sensitive information? Simple: create multiple Knowledge Bases and apply the appropriate security settings.

The effect is brilliant: using the same Assistant, two people can receive different answers. If a user doesn’t have access to a certain Knowledge Base, the Assistant won’t be able to access that knowledge for them, protecting your data and keeping it as private as you need.
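Conceptually, this behaves like filtering retrieval by permissions before the model ever sees the content. This is a hypothetical Python model of that idea, not Qlik’s implementation: the `KnowledgeBase` class, the group names, and `visible_documents` are all illustrative assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class KnowledgeBase:
    """Hypothetical stand-in for a Knowledge Base with group-based access."""
    name: str
    allowed_groups: set
    documents: list = field(default_factory=list)


def visible_documents(knowledge_bases, user_groups):
    """Return only documents from Knowledge Bases the user is allowed to read."""
    docs = []
    for kb in knowledge_bases:
        # Access is granted if the user shares at least one group with the KB.
        if kb.allowed_groups & user_groups:
            docs.extend(kb.documents)
    return docs
```

Because the filter runs before an answer is generated, a user outside a Knowledge Base's groups leaves the Assistant with nothing from that Knowledge Base to draw on, which is how the same Assistant can answer two people differently.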

In conclusion, while Qlik Answers still has some areas for improvement (I encountered a few UI issues, all minor), the engine and functionality are among the best I’ve seen in months. It’s a much more robust and secure solution than others on the market. It’s also already integrated with the entire Qlik platform, which makes it even more powerful. And this is just the first version. Qlik Answers puts Qlik miles ahead of the competition. Big shoutout to CEO Mike Capone: the bold acquisitions and strategic decisions made in the last five years have changed the game entirely.

I also need to mention the pricing model. You purchase a set number of questions and indexed pages. Compared to what you’d pay for similar access with OpenAI, Qlik is much cheaper (if this article garners enough attention, I plan to write a cost study soon). It’s also better because you prepay, so there are no surprise bills like the $10,000 one I received four years ago when using Amazon Comprehend (yes, you read that right). Thankfully, that was within the project budget.

Before my final paragraph, I should answer the question in the title of this article: is it worth investing in Qlik Answers? Absolutely! Yes! Whether you like it or not, your employees are already using some form of generative AI. Some may even be uploading sensitive information to a 3rd-party tool without any control or auditing. Qlik Answers gives you the control and transparency you need, and gives your users the power they are asking for.

In this article, which I hope you found useful, I only presented my initial impressions of the very first version of Qlik Answers. Stay tuned for more articles and videos. Like everybody else, I am planning to use it in a real use case soon and then, finally, get to the 1st most enjoyable part of my job: helping clients make better data-driven decisions and bring their business to the next level.
